The Geographical magazine, which is the official magazine of the Royal Geographical Society, ran an article about "the future of mapping". The piece was written by Katie Burton, and it covers a range of recent developments in mapping technologies. I was interviewed for the piece, and provided information about our work with Sapelli, and also comments …

Category: GIS Usability

Citizen Science 2019: Designing technology to maximize cultural diversity, uptake, and outcomes of citizen science

This blog post was written by Michelle Neil of ACSA with edits by me (yay! collaborative note taking!) (apologies for getting names wrong!). The session was structured in the following way: first, each person presented their issue, and then they answered questions posed by other panel members. The questions that we managed …

Papers from PPGIS 2017 meeting: state of the art and examples from Poland and the Czech Republic

About a year ago, the Adam Mickiewicz University in Poznań, Poland, hosted the PPGIS 2017 workshop (here are my notes from the first day and the second day). Today, four papers from the workshop were published in the journal Quaestiones Geographicae, which was established in 1974 as an annual journal of the Faculty of Geographical and Geological …

Developing mobile applications for environmental and biodiversity citizen science: considerations and recommendations

The first outcome of the December 2016 workshop on apps, platforms, and portals for citizen science projects was the open access paper "Defining principles for mobile apps and platforms development in citizen science", which came out in October 2017. The workshop, which was organised by Soledad Luna and Ulrike Sturm from the Berlin Museum for Natural History, has …

Justice and the Digital symposium notes

The Digital Geographies Research Group of the RGS-IBG held its annual symposium at the University of Sheffield, under the theme “Justice and the Digital”. These are partial notes from the day. The symposium's opening session focused on the important question "What's Justice got to do with it?" Jeremy Crampton covered three issues: practices of surveillance in the context …

Identifying success factors in crowdsourced geographic information use in government

A few weeks ago, the Global Facility for Disaster Reduction and Recovery (GFDRR) published an update to its 2014 report on the use of crowdsourced geographic information in government. The 2014 report was very successful: it has been downloaded almost 1,800 times from 41 countries around the world in about 3 years (with more …

Lessons learned from Volunteers Interactions with Geographic Citizen Science – Afternoon session

The context of the workshop and the notes from the first part of the workshop are available here. The theme of the second part of the day was "Interacting with geographical citizen science: lessons learned from urban environments".

Volunteer interactions with flood crowdsourcing platforms (Avi Baruch): the talk is based on a completed PhD on the aspects of volunteers …

Lessons learned from Volunteers Interactions with Geographic Citizen Science – Morning session

On 27 April, UCL hosted a workshop on the "Lessons learned from Volunteers Interactions with Geographic Citizen Science". The workshop description was as follows: "A decade ago, in 2007, Michael Goodchild defined volunteered geographic information (VGI) as ‘the widespread engagement of large numbers of private citizens, often with little in the way of formal …

Citizen Science & Scientific Crowdsourcing – week 2 – Google Local Guides

The first week of the "Introduction to Citizen Science and Scientific Crowdsourcing" course was dedicated to an introduction to the field of citizen science, using its history, examples and typologies to demonstrate the breadth of the field. The second week was dedicated to the second half of the course name: crowdsourcing in general, and its …

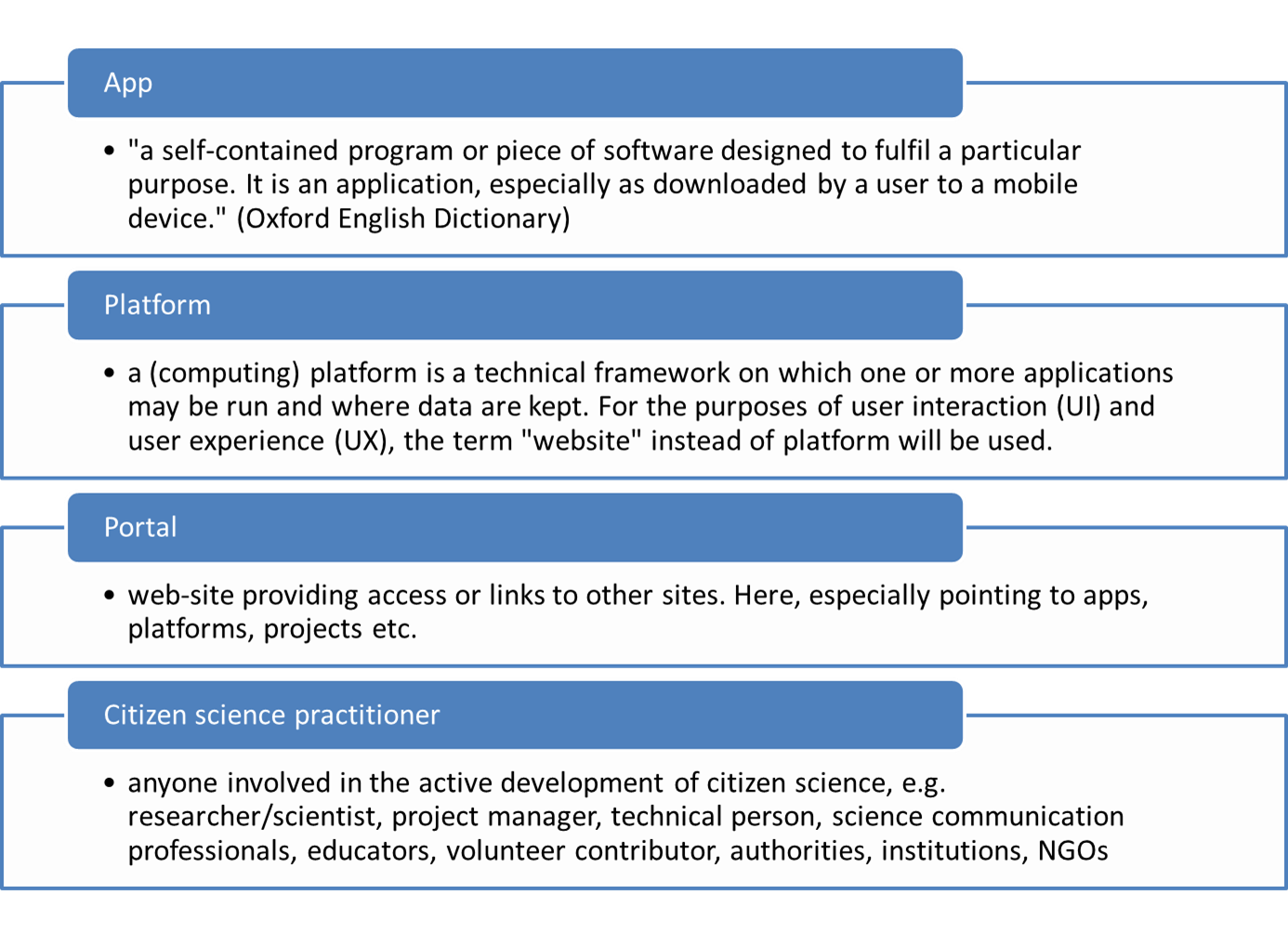

Defining principles for mobile apps and platforms development in citizen science

In December 2016, ECSA and the Natural History Museum in Berlin organised a workshop on analysing apps, platforms, and portals for citizen science projects. Now, the report from the workshop, with additions from a second workshop held in April 2017, has evolved into an open peer review paper in RIO Journal. The …